For 2026, I've decided to use this space to teach everything I know about content engineering + AI search so far.

Maybe you, too, feel like you're getting a firehose of information about new developments, tools, and AI use cases. It's too much! I'm going to help you step away from that firehose and give you a proverbial glass of water instead, with a weekly breakdown of AI content-related lessons that you can get through in 10 minutes or less. ✨

Let's go deep on one topic each week so you can walk away feeling like you gleaned some *useful* information you can put into practice. I figure I'm down in the weeds with it every day as I run my business, so I might as well share what I'm learning.

What makes content citation-worthy to AI (and why it looks nothing like SEO)

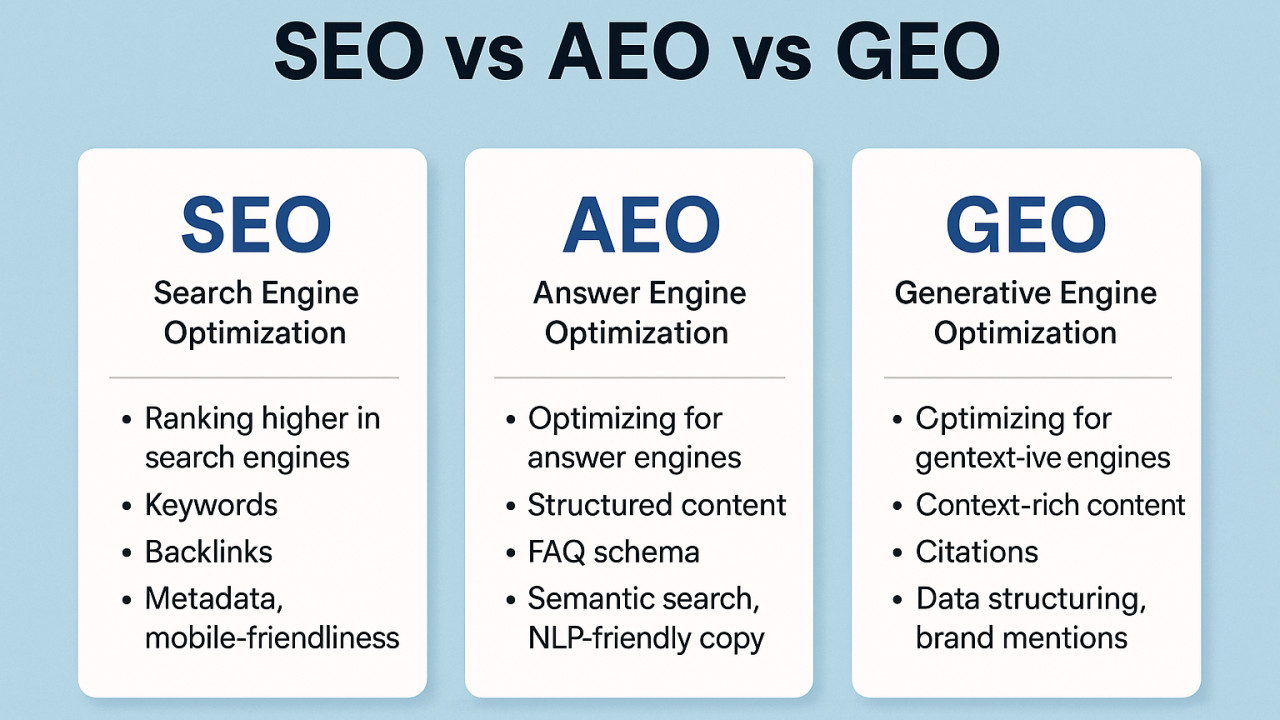

Something I've been wrestling with lately: watching marketers panic about AI search while simultaneously clinging to the same search engine optimization (SEO) playbook that's kept them employed for the past decade. I get it! I, too, initially clung to the “old way” in the face of these scary new tools that could (and in some ways already do) put a freelance content writer like me out of business.

But here's the uncomfortable truth we need to talk about. The shift from SEO to GEO (Generative Engine Optimization) isn't just a technical evolution. It's a philosophical reorientation of how we think about content, authority, and what it means to be "findable" online. And most marketers? They're not ready.

The New Skills That Actually Matter (And Why You May Not Have Them Yet)

Let's start with the hard stuff, because that's where real growth happens.

The core competency for effective GEO isn't anything you learned in your SEO certification program. It's prompt engineering literacy. And no, I don't mean knowing how to ask ChatGPT to write a blog post for you.

I'm talking about understanding how large language models interpret queries, prioritize sources, and construct responses, so you can reverse-engineer which content structures actually get used in the AI summaries Google now shows at the top of its search results (and in AI tools beyond Google).

This is fundamentally different from keyword research.

You're not looking for search volume and competition metrics. You're trying to understand how an AI tool decides what information is trustworthy enough to cite, recent enough to matter, and clear enough to synthesize into an answer.

This requires you to think like the information retrieval system itself and get curious about Qs like:

When someone asks an LLM about your product category, which sources does it pull?

Why those and not others?

What patterns do those cited sources share?

If you can't answer these questions through systematic testing and analysis, you're not doing GEO. You're just crossing your fingers and hoping your content shows up in AI responses.
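One way to make that testing systematic is to log every AI answer you collect, along with the URLs it cites, and tally which domains come up most often. Here's a minimal sketch in Python (3.9+), assuming you've already gathered the answers yourself, by hand or with whatever tool you use. The queries, platforms, and domains below are hypothetical placeholders, not real results.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical log of AI answers collected by hand or with a tracking tool.
# Each entry: the query asked, the platform, and the URLs the answer cited.
responses = [
    {"query": "best project management software for agencies",
     "platform": "ChatGPT",
     "cited_urls": ["https://www.example-review.com/pm-tools",
                    "https://blog.example-saas.com/agency-guide"]},
    {"query": "project management software comparison",
     "platform": "Gemini",
     "cited_urls": ["https://www.example-review.com/compare",
                    "https://docs.example-saas.com/features"]},
    {"query": "how do agencies manage client projects",
     "platform": "ChatGPT",
     "cited_urls": ["https://blog.example-saas.com/agency-guide"]},
]

def domain_counts(responses):
    """Count how many answers cite each domain (at most once per answer)."""
    counts = Counter()
    for r in responses:
        # A set, so a domain cited twice in one answer only counts once.
        domains = {urlparse(u).netloc.removeprefix("www.") for u in r["cited_urls"]}
        counts.update(domains)
    return counts

for domain, n in domain_counts(responses).most_common():
    print(f"{domain}: cited in {n} of {len(responses)} answers")
```

Subdomains (blog. vs. docs.) are counted separately here, which shows which part of a site gets pulled; collapse them if you only care about the brand level. Once you have the tally, open the top-cited pages side by side and look for the patterns they share.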

In practice, this means writing in clear, hierarchical formats: direct answers first, followed by supporting context.

You're using natural language that mirrors how people actually ask questions rather than keyword-stuffed content that sounds like it was written by a robot for robots. (The irony of that approach working for traditional search but failing for AI search is not lost on me.)

What This Actually Looks Like in Practice

My cheat code for doing this well? Start with the ELI5 (explain like I'm 5) approach.

When you explain something to a five-year-old, you start with one question and then (usually) answer a string of follow-ups, each of which adds the context they need to understand the original concept more deeply.

Why ELI5 is Good for LLMs:

Simplification & Clarity: It forces the LLM to strip away technical jargon, making complex topics easier to understand.

Contextual Understanding: It tends to produce tighter summaries, because the explanation is organized around a few core concepts instead of every surrounding detail.

Content Generation: It helps create relatable content, such as blog posts or LinkedIn updates, by generating simple explanations and analogies.

Now, this isn't too different from what we've been taught as content marketers: to create high-quality, comprehensive content.

In practice, though, we'd write 2,000-word blog posts rife with variations of our target phrase, build internal links with exact-match anchor text, and optimize our title tags and meta descriptions like our lives depended on it. Keyword optimization was the name of the game.

But that entire playbook? Increasingly irrelevant. AI models don't care about your keyword density.

They're reading your content holistically, trying to understand what you're actually saying and whether you're a credible source worth citing. They're looking for "citation-worthy" assets: things like original research, proprietary data, and third-party expert perspectives that they can confidently reference when constructing answers.

This is why some of the more successful GEO writers right now have a journalism background; it's that same skill set. Developing those assets becomes your competitive moat.

The truth is: You can't fake authority with an AI model the way you could sometimes game Google's algorithm.

You either have genuine expertise and original insights, or you don't. And if you don't, no amount of optimization will help you.

The Measurement Problem Nobody Wants to Talk About

Because of SEO, we're so conditioned to track rankings, click-through rates, and organic sessions that we struggle to conceptualize what success even looks like when those metrics become less relevant.

How do you track brand mentions in AI responses?

How do you measure citation frequency across different query types?

How do you assess whether your brand is being represented accurately in synthesized answers?

These aren't rhetorical questions. They're fundamental ones.

You need tools in place for tracking brand mentions across query variations and LLM platforms.

You need to measure citation accuracy and context, not just whether you got mentioned.

You need to track the share of voice for AI responses versus competitors.

And somehow, you need to connect all of this to actual business outcomes in a way that makes sense to stakeholders who still think in SEO terms. Without these measurement capabilities, you can't prove ROI. And without proving ROI, you'll never get the investment needed to do GEO properly.
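To make "share of voice" concrete: one common way to define it is your brand's fraction of all mentions of you and your tracked competitors across the answers you collected. Here's a minimal sketch of that calculation per platform, assuming you've already saved the answer text. The brand names and answers are hypothetical, and case-insensitive whole-word matching is a deliberate simplification (it won't catch misspellings or paraphrases).

```python
import re
from collections import defaultdict

# Hypothetical brands: yours first, then the competitors you track.
BRANDS = ["Acme", "Globex", "Initech"]

# Hypothetical saved answers: (platform, answer text).
answers = [
    ("ChatGPT", "Popular options include Acme and Globex. Acme is known for its reporting."),
    ("ChatGPT", "Globex and Initech both offer free tiers."),
    ("Gemini", "Acme is a strong choice for small teams."),
]

def mentions(text, brand):
    """Count case-insensitive whole-word mentions of a brand in one answer."""
    return len(re.findall(rf"\b{re.escape(brand)}\b", text, flags=re.IGNORECASE))

def share_of_voice(answers, brands):
    """Return {platform: {brand: share of all tracked-brand mentions}}."""
    totals = defaultdict(lambda: defaultdict(int))
    for platform, text in answers:
        for brand in brands:
            totals[platform][brand] += mentions(text, brand)
    result = {}
    for platform, counts in totals.items():
        all_mentions = sum(counts.values())
        result[platform] = {
            b: (counts[b] / all_mentions if all_mentions else 0.0) for b in brands
        }
    return result

for platform, shares in share_of_voice(answers, BRANDS).items():
    print(platform, {b: f"{s:.0%}" for b, s in shares.items()})
```

This version counts every mention, so an answer that names you twice weighs more. If you'd rather count the number of answers that mention each brand at all, cap `mentions()` at 1 per answer; either choice works as long as you stick with it over time.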

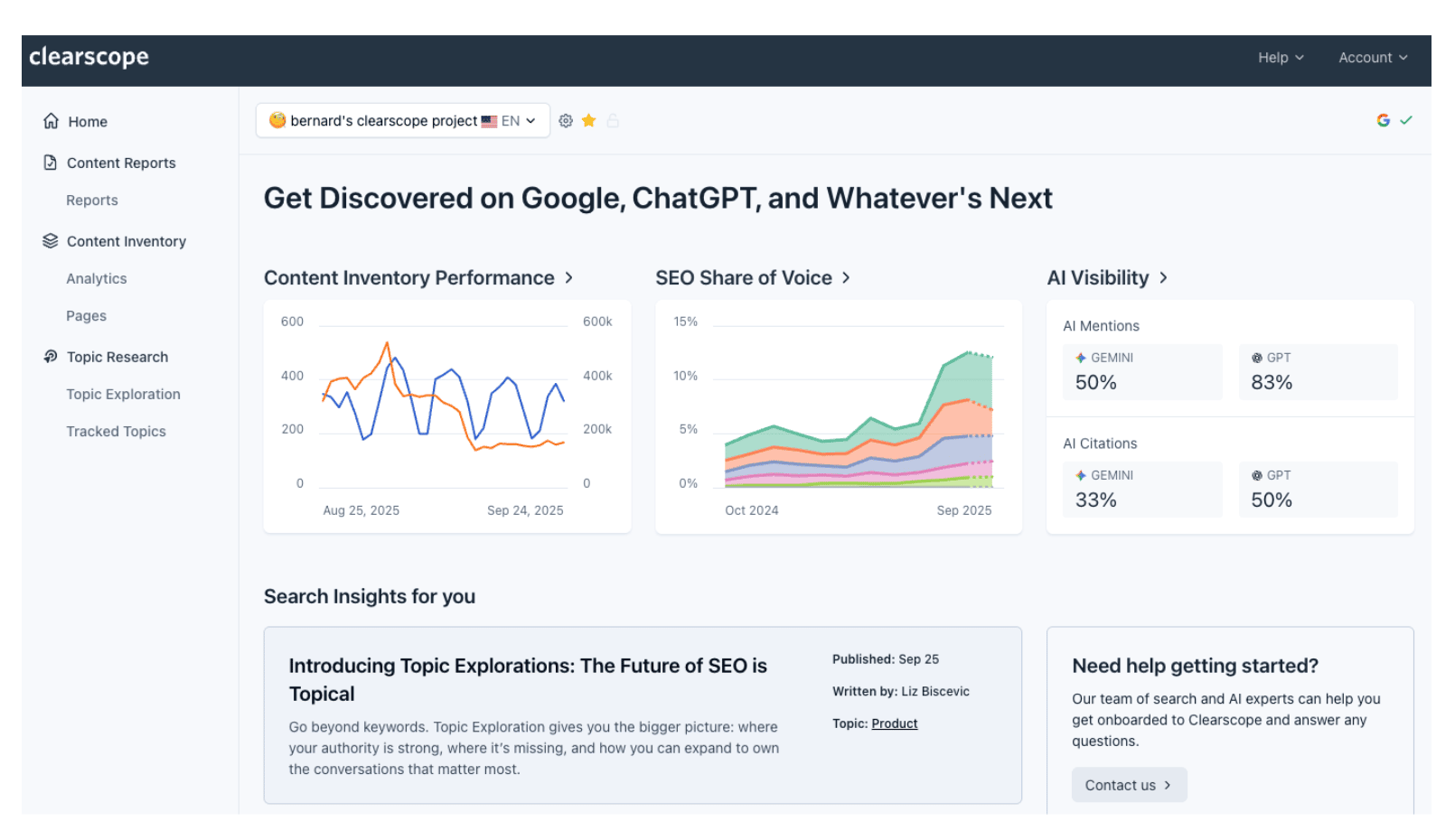

My favorite tool for this right now is Clearscope.

It’s become my go-to for tracking GEO performance because it provides visibility across both traditional search engines and AI-powered platforms like ChatGPT and Gemini.

Unlike traditional SEO tools that only show Google rankings, Clearscope shows which sources LLMs are using to compile their answers, giving you real insight into your AI search performance. That means you can keep tabs on all of it in a single dashboard, rather than piecing together fragmented data in a crappy spreadsheet.

What Transfers and What Dies: The Honest Autopsy of SEO Skills

Let's talk about what survives the transition from SEO to GEO, because it's not all bad news.

Understanding user intent still matters because AI models want to satisfy the same human needs as search engines do.

Your ability to identify content gaps and recognize what makes content genuinely high-quality becomes even more critical when you can't rely on technical tricks to compensate for mediocre substance.

Your editorial judgment about what makes content credible—that survives.

Technical skills around structured data and making information accessible to machines—those partially transfer, though the emphasis shifts from "making Google's bot happy" to "making information unambiguous for machine comprehension."

But here's what dies: the entire keyword optimization playbook.

Keyword density calculations? Dead. Exact-match anchor text strategies? Irrelevant. Link schemes and all the tricks designed to game ranking algorithms? Counterproductive.

AI models don't have a "position 1" to fight for. They synthesize answers from multiple sources, so the zero-sum ranking game mentality that defined SEO competition stops making sense.

Traditional on-page SEO tactics like keyword placement in specific HTML elements matter far less when LLMs read content holistically rather than weighing title tags and H1s. The entire mindset of "optimizing for the algorithm" rather than for the end user doesn't work either, because LLMs are built to surface genuinely helpful information, not to reward optimization tricks.

The Three Non-Negotiables for Actually Learning This GEO Stuff

If you're serious about building GEO competency (whether for yourself or for a team you're training), there are three topics you cannot skip.

These aren't nice-to-haves. They're the foundation that determines whether you're doing real GEO work or just cosplaying as an AI-savvy marketer.

1. LLM information retrieval mechanics.

This is the price of entry. You need to understand how language models actually retrieve, evaluate, and synthesize information.

What makes a source "citation-worthy" in an AI's decision tree?

How do recency versus authority trade-offs work?

How do models handle conflicting information?

What triggers a brand mention versus a generic category description?

Without this foundational understanding, you're just guessing. You need practical frameworks for reverse-engineering which content structures get pulled into responses across different query types (like informational, comparative, and transactional).

You also need methods for auditing your brand's current "AI visibility" across major LLM platforms. (Again, my vote is for Clearscope, but there are *so many* great new tools for this popping up every day.)

This isn't theoretical knowledge. It's about teaching yourself (or your team) to think like the retrieval system.
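To audit how your visibility varies by query type, you can tag each test query as informational, comparative, or transactional and compute how often answers to each type cite your domain. A minimal sketch, assuming you've already collected the answers and their cited URLs; the domain `example-saas.com` and the sample results are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlparse

MY_DOMAIN = "example-saas.com"  # hypothetical: replace with your own domain

# Hypothetical test results: the query type plus the URLs each AI answer cited.
results = [
    {"type": "informational", "cited_urls": ["https://blog.example-saas.com/what-is-x"]},
    {"type": "informational", "cited_urls": ["https://en.wikipedia.org/wiki/X"]},
    {"type": "comparative", "cited_urls": ["https://www.example-review.com/x-vs-y"]},
    {"type": "transactional", "cited_urls": ["https://example-saas.com/pricing"]},
]

def cites_domain(urls, domain):
    """True if any URL is on the domain itself or one of its subdomains."""
    for url in urls:
        host = urlparse(url).netloc.lower()
        if host == domain or host.endswith("." + domain):
            return True
    return False

def citation_rate_by_type(results, domain):
    """Return {query_type: fraction of answers that cite the domain}."""
    asked = defaultdict(int)
    cited = defaultdict(int)
    for r in results:
        asked[r["type"]] += 1
        if cites_domain(r["cited_urls"], domain):
            cited[r["type"]] += 1
    return {t: cited[t] / asked[t] for t in asked}

for qtype, rate in citation_rate_by_type(results, MY_DOMAIN).items():
    print(f"{qtype}: cited in {rate:.0%} of answers")
```

With a sample this small the percentages don't mean much; the value is in running the same query set on a schedule, across platforms, and watching how the rates move.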

2. Content architecture for machine comprehension.

You need tactical training on structuring content so AI models can confidently parse, understand, and cite it. This means moving beyond the vague directive to "write good content" and learning specific techniques, such as:

Schema markup (structured data, usually written as JSON-LD with the Schema.org vocabulary, that sits in your site's HTML and labels what each page's content is)

Building comparative frameworks that position your brand within category context

Developing "synthesis-friendly" formats like structured comparisons, data tables, and definitional content that AI models can easily work with

Before-and-after examples showing how restructuring existing content increases citation rates

Templates for creating net-new assets specifically designed for AI retrieval (like original research formats, expert POV pieces, and technical documentation structures)
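For a concrete picture of schema markup, the sketch below uses Python only to assemble a Schema.org `FAQPage` object and print it as JSON-LD inside the `<script type="application/ld+json">` tag you'd place in a page's HTML. The questions and answers are hypothetical placeholders. The markup makes a page's question-and-answer structure explicit for machines, but whether any given search engine or LLM actually uses it (or shows it as a rich result) isn't guaranteed.

```python
import json

# Hypothetical question-and-answer pairs taken from a page's visible content.
# Structured data should describe content that actually appears on the page.
faqs = [
    ("What is generative engine optimization (GEO)?",
     "GEO is the practice of structuring content so AI tools can understand and cite it."),
    ("How is GEO different from SEO?",
     "SEO targets rankings in a list of links; GEO targets being cited in AI-generated answers."),
]

def faq_jsonld(pairs):
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

markup = json.dumps(faq_jsonld(faqs), indent=2)
# Wrap the JSON-LD in the script tag that goes in the page's HTML.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The same pattern works for other Schema.org types (such as `Article` or `Product`); only the `@type` and its properties change.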

This is where craft meets strategy, and it's where most marketers get lazy because it requires actual work rather than just following a checklist.

3. Measurement and attribution frameworks for AI visibility.

This is where most marketers will flounder, because we don't yet have established industry standards for measuring success in this new wild, wild west of GEO.

Traditional metrics like rankings and click-through rates aren't as meaningful in this context.

That's where you need to shift into data scientist mode.

Systematically test brand mentions across query variations and LLM platforms. Measure citation accuracy and context. Track share-of-voice in AI responses versus competitors.

From there, you can start connecting AI visibility to actual business outcomes.

You need dashboards, tracking methodologies, and reporting frameworks that prove ROI to stakeholders who still think in SEO terms.

Without measurement, GEO becomes yet another "emerging channel" that leadership doesn’t understand.

The Uncomfortable Truth About Where We Are Right Now

We're in the messy middle of a shift in how information gets discovered and consumed.

The skills gap is real. The pressure to show ROI while the industry figures out what success even looks like is intense. And there's no clear path forward because we're literally creating the discipline in real time.

But that's also what makes this moment so interesting. We're not bound by established best practices or industry consensus about "the right way" to do things. We get to experiment, test, learn, and build frameworks that might define how GEO works for the next decade.

The marketers who thrive in this transition will be those who embrace uncertainty, develop genuine curiosity about how these systems actually work, and build competency through experimentation.

This is hard work. It requires intellectual humility to admit what you don't know and creative problem-solving to navigate all this new information and apply it to your work. But if you're still reading this, you're probably not looking for easy.

I'm glad you're here. Let's keep learning together :)